Spark on Kubernetes: separating storage and compute

Notes from the official documentation.

Running Spark on Kubernetes

https://spark.apache.org/docs/latest/running-on-kubernetes.html

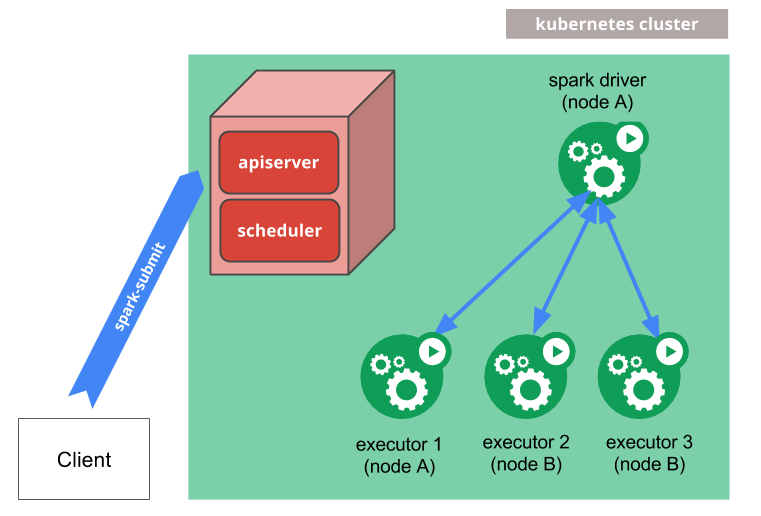

spark-submit can be directly used to submit a Spark application to a Kubernetes cluster. The submission mechanism works as follows:

- Spark creates a Spark driver running within a Kubernetes pod.

- The driver creates executors which are also running within Kubernetes pods and connects to them, and executes application code.

- When the application completes, the executor pods terminate and are cleaned up, but the driver pod persists logs and remains in “completed” state in the Kubernetes API until it’s eventually garbage collected or manually cleaned up.

Note that in the completed state, the driver pod does not use any computational or memory resources.

The driver and executor pod scheduling is handled by Kubernetes. Communication to the Kubernetes API is done via fabric8.
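The lifecycle described above can be watched from the Kubernetes side with `kubectl`. The following is a minimal sketch, assuming `kubectl` is already configured for the cluster and that Spark labels its pods with `spark-role` (`driver` or `executor`). The function names are hypothetical, and the functions only print the commands so they can be reviewed before being run by hand.

```shell
#!/bin/sh
# Hypothetical helpers: each one prints a kubectl command instead of running it.
# Assumption: Spark pods carry the label spark-role=driver or spark-role=executor.

# Print the command that lists pods of a given role in a namespace.
list_spark_pods_cmd() {
  role="$1"
  ns="${2:-default}"
  echo "kubectl get pods -n $ns -l spark-role=$role"
}

# Print the command that deletes driver pods which finished successfully
# (pod phase Succeeded, shown as "Completed" by kubectl).
cleanup_completed_drivers_cmd() {
  ns="${1:-default}"
  echo "kubectl delete pods -n $ns -l spark-role=driver --field-selector=status.phase=Succeeded"
}

list_spark_pods_cmd driver
cleanup_completed_drivers_cmd
```

Printing rather than executing keeps the cleanup step explicit, since deleting a completed driver pod also discards its logs.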

Submitting a Spark application requires a Docker image. The Spark repo ships a tool for building one.

Kubernetes requires users to supply images that can be deployed into containers within pods. The images are built to be run in a container runtime environment that Kubernetes supports.

To build the base Docker image:

$ ./bin/docker-image-tool.sh -r <repo> -t my-tag build

To build the additional PySpark Docker image:

$ ./bin/docker-image-tool.sh -r <repo> -t my-tag -p ./kubernetes/dockerfiles/spark/bindings/python/Dockerfile build

To build the additional SparkR Docker image:

$ ./bin/docker-image-tool.sh -r <repo> -t my-tag -R ./kubernetes/dockerfiles/spark/bindings/R/Dockerfile build
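The cluster has to be able to pull the image, so a build is usually followed by a push to a registry. Below is a minimal sketch of that build-then-push flow, assuming the tool's `push` subcommand and assuming the base image is named `<repo>/spark:<tag>`. The function names and the registry host `registry.example.com` are hypothetical, and the commands are only printed, not executed.

```shell
#!/bin/sh
# Hypothetical wrapper around docker-image-tool.sh; prints commands only.

# Print the build and push commands for a given repo and tag.
image_tool_cmds() {
  repo="$1"
  tag="$2"
  echo "./bin/docker-image-tool.sh -r $repo -t $tag build"
  echo "./bin/docker-image-tool.sh -r $repo -t $tag push"
}

# Print the image reference to pass as spark.kubernetes.container.image.
# Assumption: the tool names the base image <repo>/spark:<tag>.
spark_image_ref() {
  echo "$1/spark:$2"
}

image_tool_cmds registry.example.com/myteam my-tag
spark_image_ref registry.example.com/myteam my-tag
```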

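Submit the application. The application jar still has to be provided separately; the `local://` scheme in the example below points at a jar that is already present inside the Docker image.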

To launch Spark Pi in cluster mode,

$ ./bin/spark-submit \
    --master k8s://https://<k8s-apiserver-host>:<k8s-apiserver-port> \
    --deploy-mode cluster \
    --name spark-pi \
    --class org.apache.spark.examples.SparkPi \
    --conf spark.executor.instances=5 \
    --conf spark.kubernetes.container.image=<spark-image> \
    local:///path/to/examples.jar
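The placeholders in this command are easy to get wrong, especially the `k8s://https://` prefix of the master URL. The sketch below assembles the command from its parts and prints it for inspection. It assumes the API server host and port are already known (for example from `kubectl cluster-info`). The function names and the sample values are hypothetical.

```shell
#!/bin/sh
# Hypothetical helpers; they print the spark-submit command, they do not run it.

# Build the --master value from the API server host and port.
k8s_master_url() {
  echo "k8s://https://$1:$2"
}

# Print a cluster-mode spark-submit command for the SparkPi example.
# Arguments: apiserver host, apiserver port, image, jar path inside the image.
spark_pi_cmd() {
  master="$(k8s_master_url "$1" "$2")"
  image="$3"
  jar="$4"
  echo "./bin/spark-submit --master $master --deploy-mode cluster --name spark-pi --class org.apache.spark.examples.SparkPi --conf spark.executor.instances=5 --conf spark.kubernetes.container.image=$image local://$jar"
}

# Sample values are placeholders, not real endpoints.
spark_pi_cmd 192.0.2.10 6443 myrepo/spark:my-tag /path/to/examples.jar
```

Since the jar path is absolute, `local://` followed by `/path/...` yields the three slashes seen in `local:///path/to/examples.jar`.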