Ranger + Hive: investigating HDFS permissions on external table paths

Reading the source code only gets you so far. You have to run the tests yourself, otherwise the corner cases stay a mystery. Get your hands dirty.

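Test conclusions:

- Creating an external table whose location does not exist yet requires rwx on the nearest existing ancestor HDFS path (to create the directories) plus rwx on the table location itself (to run queries).

- If the external table location already exists, granting full (rwx) permission on that location alone is enough to create the table.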
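
The two conclusions above can be written down as a small helper. This is only a sketch of the rule as observed in these tests, not Ranger or Hive code. The function names and the idea of passing in a set of existing directories are assumptions made for illustration.

```python
import posixpath


def nearest_existing_ancestor(location, existing_dirs):
    """Walk upward from `location` until a directory listed in `existing_dirs` is found."""
    path = posixpath.normpath(location)
    while path != "/":
        path = posixpath.dirname(path)
        if path in existing_dirs:
            return path
    return "/"  # the root always exists


def required_hdfs_grants(location, existing_dirs):
    """Return {hdfs_path: permission} needed to create and query an external table.

    Encodes the test conclusions: rwx on the table location itself, plus rwx on
    the nearest existing ancestor when the location has not been created yet.
    """
    location = posixpath.normpath(location)
    grants = {location: "rwx"}
    if location not in existing_dirs:
        grants[nearest_existing_ancestor(location, existing_dirs)] = "rwx"
    return grants


if __name__ == "__main__":
    # Mirrors the test cluster: /a does not exist, so "/" is the nearest ancestor.
    print(required_hdfs_grants("/a/b/c", {"/", "/apps", "/user"}))
    # Location already exists: only the location itself needs a grant.
    print(required_hdfs_grants("/a/b/c", {"/", "/a", "/a/b", "/a/b/c"}))
```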

Test environment: Tencent Cloud EMR 3.6.0 (HDFS 3.2.2 + Ranger 2.3.0)

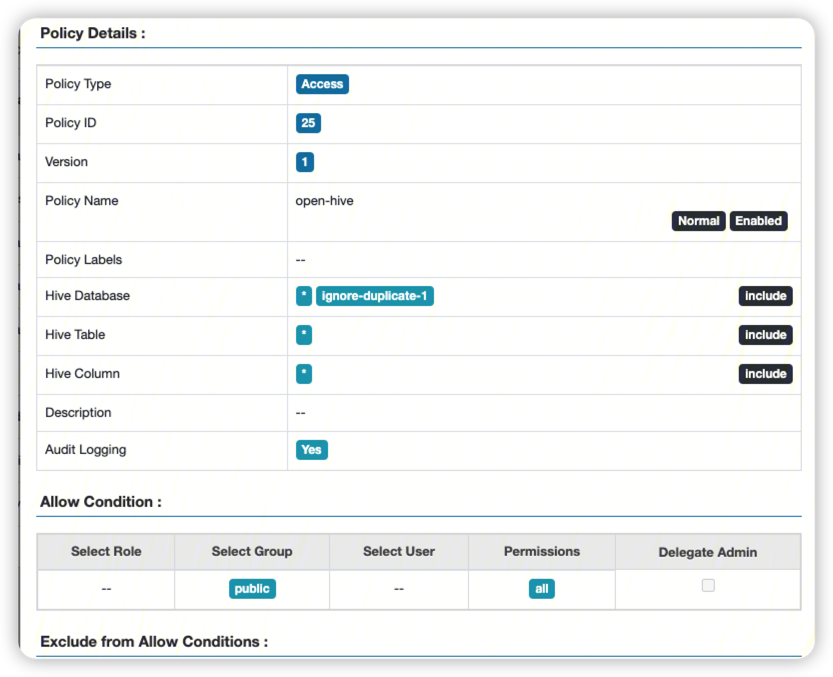

To isolate variables: only HDFS permissions are under test here, so the Hive policy is left fully open.

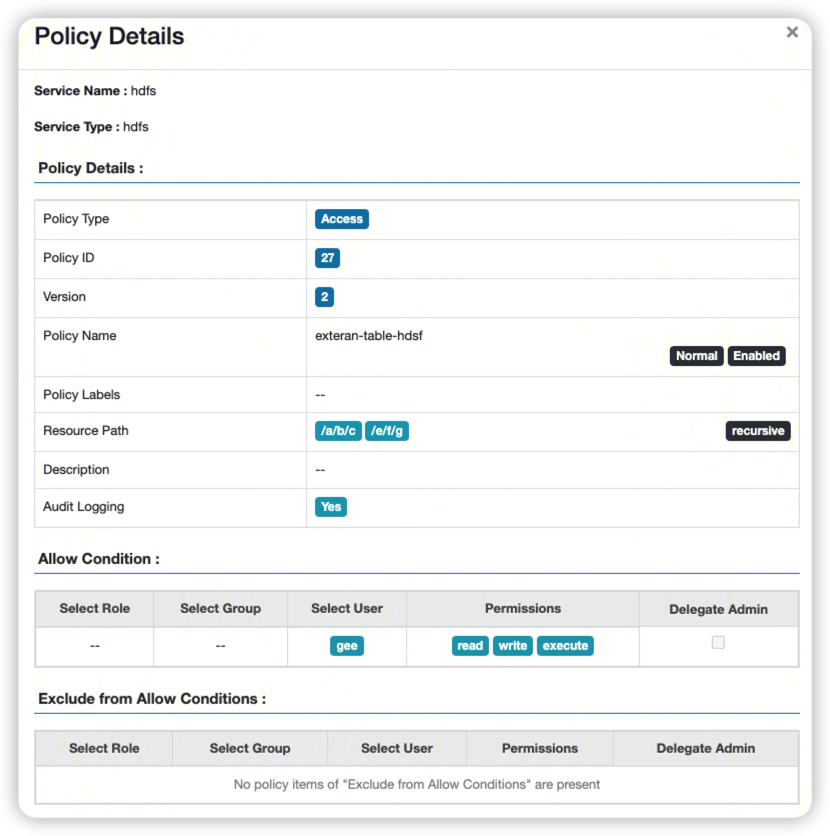

The HDFS policy grants permissions on the path /a/b/c.

Log in with kinit -kt, then connect with beeline. The Hive principal comes from Hive's XML configuration.

[root@tbds-172-16-16-11 ~]# klist -kt /tmp/gee.keytab

Keytab name: FILE:/tmp/gee.keytab

KVNO Timestamp Principal

---- ------------------- ------------------------------------------------------

1 08/02/2023 15:55:54 gee@TBDS-HBMGJTQZ

1 08/02/2023 15:55:54 gee@TBDS-HBMGJTQZ

[root@tbds-172-16-16-11 ~]# kinit -kt /tmp/gee.keytab gee

[root@tbds-172-16-16-11 ~]#

[root@tbds-172-16-16-11 ~]# klist

Ticket cache: FILE:/tmp/krb5cc_0

Default principal: gee@TBDS-HBMGJTQZ

Valid starting Expires Service principal

08/02/2023 15:58:14 08/03/2023 15:58:14 krbtgt/TBDS-HBMGJTQZ@TBDS-HBMGJTQZ

renew until 08/05/2023 15:58:14

beeline -u "jdbc:hive2://172.16.16.3:7001/default;principal=hadoop/172.16.16.3@EMR-1MSO7OJ3"

which: no hbase in (/root/.pyenv/bin:/usr/local/service/starrocks/bin:/data/Impala/shell:/usr/local/service/kudu/bin:/usr/local/service/tez/bin:/usr/local/jdk/bin:/usr/local/service/hadoop/bin:/usr/local/service/hive/bin:/usr/local/service/hbase/bin:/usr/local/service/spark/bin:/usr/local/service/storm/bin:/usr/local/service/sqoop/bin:/usr/local/service/kylin/bin:/usr/local/service/alluxio/bin:/usr/local/service/flink/bin:/data/Impala/bin:/usr/local/service/oozie/bin:/usr/local/service/presto/bin:/usr/local/service/slider/bin:/usr/local/service/kudu/bin:/usr/local/jdk//bin:/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/root/bin)

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/usr/local/service/hive/lib/log4j-slf4j-impl-2.17.1.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/usr/local/service/hadoop/share/hadoop/common/lib/slf4j-log4j12-1.7.25.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]

Connecting to jdbc:hive2://172.16.16.3:7001/default;principal=hadoop/172.16.16.3@EMR-1MSO7OJ3

Connected to: Apache Hive (version 3.1.3)

Driver: Hive JDBC (version 3.1.3)

Transaction isolation: TRANSACTION_REPEATABLE_READ

Beeline version 3.1.3 by Apache Hive

0: jdbc:hive2://172.16.16.3:7001/default>

0: jdbc:hive2://172.16.16.3:7001/default> select current_user();

+------+

| _c0 |

+------+

| gee |

+------+

1 row selected (3.372 seconds)

The CREATE EXTERNAL TABLE statement specifies the location /a/b/c. Note that at this point /a does not exist in HDFS.

[root@172 ~]# hdfs dfs -ls /

Found 8 items

drwxr-xr-x - hadoop supergroup 0 2023-08-11 13:56 /apps

drwxr-xr-x - hadoop supergroup 0 2023-08-11 13:56 /data

drwxrwx--- - hadoop supergroup 0 2023-08-11 13:56 /emr

drwxr-xr-x - hadoop supergroup 0 2023-08-11 13:56 /spark

drwxr-xr-x - hadoop supergroup 0 2023-08-11 13:56 /spark-history

drwx-wx-wx - hadoop supergroup 0 2023-08-11 13:57 /tmp

drwxr-xr-x - hadoop supergroup 0 2023-08-11 13:56 /user

drwxr-xr-x - gee supergroup 0 2023-08-11 17:00 /usr

CREATE EXTERNAL TABLE IF NOT EXISTS g002 (a int, b string) ROW FORMAT DELIMITED FIELDS TERMINATED BY ',' STORED AS TEXTFILE LOCATION '/a/b/c';

Running the statement fails with an error:

0: jdbc:hive2://172.16.16.3:7001/default> CREATE EXTERNAL TABLE IF NOT EXISTS g002 (a int, b string) ROW FORMAT DELIMITED FIELDS TERMINATED BY ',' STORED AS TEXTFILE LOCATION '/a/b/c';

Error: Error while compiling statement: FAILED: HiveAccessControlException Permission denied: user [gee] does not have [ALL] privilege on [hdfs://172.16.16.3:4007/a/b/c] (state=42000,code=40000)

0: jdbc:hive2://172.16.16.3:7001/default>
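
When going through many of these failures, it can help to pull the user, privilege, and path out of the message instead of reading it by eye. The sketch below assumes the message keeps the exact wording shown in the error above. `parse_hive_denial` is a hypothetical helper name, not part of Hive or Ranger. The `[ALL]` it extracts is consistent with the rwx requirement found in these tests.

```python
import re
from urllib.parse import urlparse

# Pattern based on the wording of the HiveAccessControlException seen in this test.
_DENIED = re.compile(
    r"user \[(?P<user>[^\]]+)\] does not have \[(?P<privilege>[^\]]+)\] "
    r"privilege on \[(?P<resource>[^\]]+)\]"
)


def parse_hive_denial(message):
    """Return user, privilege, resource, and HDFS path from a denial message, or None."""
    match = _DENIED.search(message)
    if match is None:
        return None
    info = match.groupdict()
    # Strip the hdfs://host:port prefix so the path can be compared with policy paths.
    info["hdfs_path"] = urlparse(info["resource"]).path
    return info


if __name__ == "__main__":
    msg = (
        "Error: Error while compiling statement: FAILED: HiveAccessControlException "
        "Permission denied: user [gee] does not have [ALL] privilege on "
        "[hdfs://172.16.16.3:4007/a/b/c] (state=42000,code=40000)"
    )
    print(parse_hive_denial(msg))
```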

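Modify the policy: grant rwx on /, the nearest existing ancestor of /a/b/c. The policy does not need to be recursive.

Granting only x, or only wx, still fails in testing.

With rwx granted on the nearest existing ancestor, the external table is created successfully: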
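
If the policy is managed as JSON rather than by hand, the non-recursive rwx grant on the ancestor might look like the payload built below. This is a sketch only. The field names follow Ranger's policy JSON model as I understand it, while the service name `hdfs_service` and the policy name are hypothetical placeholders. Nothing here was sent to a real Ranger instance.

```python
import json


def build_hdfs_allow_policy(service, name, path, user, recursive=False):
    """Build a Ranger-style HDFS policy dict granting read/write/execute on `path`."""
    return {
        "service": service,  # hypothetical service name, replace with the real one
        "name": name,
        "isEnabled": True,
        "resources": {
            "path": {
                "values": [path],
                "isRecursive": recursive,  # the test shows recursion is not needed
                "isExcludes": False,
            }
        },
        "policyItems": [
            {
                "users": [user],
                "accesses": [
                    {"type": access, "isAllowed": True}
                    for access in ("read", "write", "execute")
                ],
                "delegateAdmin": False,
            }
        ],
    }


if __name__ == "__main__":
    policy = build_hdfs_allow_policy("hdfs_service", "ext-table-ancestor", "/", "gee")
    print(json.dumps(policy, indent=2))
```

Keeping `recursive=False` as the default reflects the finding that the grant on the ancestor only has to cover that one directory.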

0: jdbc:hive2://172.16.16.3:7001/default> CREATE EXTERNAL TABLE IF NOT EXISTS g002 (a int, b string) ROW FORMAT DELIMITED FIELDS TERMINATED BY ',' STORED AS TEXTFILE LOCATION '/a/b/c';

No rows affected (0.105 seconds)

0: jdbc:hive2://172.16.16.3:7001/default> show tables;

+-----------+

| tab_name |

+-----------+

| g001 |

| g002 |

+-----------+

2 rows selected (0.049 seconds)

Note: if the permission on /a/b/c is removed at this point, the table can no longer be queried:

0: jdbc:hive2://172.16.16.3:7001/default> select * from g002;

Error: Error while compiling statement: FAILED: SemanticException Unable to determine if hdfs://172.16.16.3:4007/a/b/c is encrypted: org.apache.hadoop.ipc.RemoteException(org.apache.ranger.authorization.hadoop.exceptions.RangerAccessControlException): Permission denied: user=gee, access=EXECUTE, inode="/a/b/c"

at org.apache.ranger.authorization.hadoop.RangerHdfsAuthorizer$RangerAccessControlEnforcer.checkRangerPermission(RangerHdfsAuthorizer.java:501)

at org.apache.ranger.authorization.hadoop.RangerHdfsAuthorizer$RangerAccessControlEnforcer.checkPermission(RangerHdfsAuthorizer.java:248)

at org.apache.hadoop.hdfs.server.namenode.FSPermissionChecker.checkPermission(FSPermissionChecker.java:225)

at org.apache.hadoop.hdfs.server.namenode.FSPermissionChecker.checkTraverse(FSPermissionChecker.java:672)

at org.apache.hadoop.hdfs.server.namenode.FSDirectory.checkTraverse(FSDirectory.java:1863)

at org.apache.hadoop.hdfs.server.namenode.FSDirectory.checkTraverse(FSDirectory.java:1881)

at org.apache.hadoop.hdfs.server.namenode.FSDirectory.resolvePath(FSDirectory.java:694)

at org.apache.hadoop.hdfs.server.namenode.FSDirStatAndListingOp.getFileInfo(FSDirStatAndListingOp.java:112)

at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.getFileInfo(FSNamesystem.java:3215)

at org.apache.hadoop.hdfs.server.namenode.NameNodeRpcServer.getFileInfo(NameNodeRpcServer.java:1294)

at org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolServerSideTranslatorPB.getFileInfo(ClientNamenodeProtocolServerSideTranslatorPB.java:830)

at org.apache.hadoop.hdfs.protocol.proto.ClientNamenodeProtocolProtos$ClientNamenodeProtocol$2.callBlockingMethod(ClientNamenodeProtocolProtos.java)

at org.apache.hadoop.ipc.ProtobufRpcEngine$Server$ProtoBufRpcInvoker.call(ProtobufRpcEngine.java:529)

at org.apache.hadoop.ipc.RPC$Server.call(RPC.java:1073)

at org.apache.hadoop.ipc.Server$RpcCall.run(Server.java:1039)

at org.apache.hadoop.ipc.Server$RpcCall.run(Server.java:963)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:422)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:2065)

at org.apache.hadoop.ipc.Server$Handler.run(Server.java:3047) (state=42000,code=40000)

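Execute policy on parent directories

In testing, with an external table located at /a/b/c, a deny-execute policy placed on /a/b/c blocks both creating and querying the table. Placing the same policy only on /a/b/ does not. A possible explanation: from the table's point of view, the nearest parent directory is /a/b/c itself rather than /a/b, which matches the inode="/a/b/c" reported in the error.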

0: jdbc:hive2://172.16.16.17:7001/default> select * from g002;

Error: Error while compiling statement: FAILED: SemanticException Unable to determine if hdfs://172.16.16.17:4007/a/b/c is encrypted: org.apache.hadoop.ipc.RemoteException(org.apache.ranger.authorization.hadoop.exceptions.RangerAccessControlException): Permission denied: user=gee, access=EXECUTE, inode="/a/b/c"

at org.apache.ranger.authorization.hadoop.RangerHdfsAuthorizer$RangerAccessControlEnforcer.checkRangerPermission(RangerHdfsAuthorizer.java:501)

at org.apache.ranger.authorization.hadoop.RangerHdfsAuthorizer$RangerAccessControlEnforcer.checkPermission(RangerHdfsAuthorizer.java:248)

at org.apache.hadoop.hdfs.server.namenode.FSPermissionChecker.checkPermission(FSPermissionChecker.java:225)

at org.apache.hadoop.hdfs.server.namenode.FSPermissionChecker.checkTraverse(FSPermissionChecker.java:672)

at org.apache.hadoop.hdfs.server.namenode.FSDirectory.checkTraverse(FSDirectory.java:1863)

at org.apache.hadoop.hdfs.server.namenode.FSDirectory.checkTraverse(FSDirectory.java:1881)

at org.apache.hadoop.hdfs.server.namenode.FSDirectory.resolvePath(FSDirectory.java:694)

at org.apache.hadoop.hdfs.server.namenode.FSDirStatAndListingOp.getFileInfo(FSDirStatAndListingOp.java:112)

at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.getFileInfo(FSNamesystem.java:3215)

at org.apache.hadoop.hdfs.server.namenode.NameNodeRpcServer.getFileInfo(NameNodeRpcServer.java:1294)

at org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolServerSideTranslatorPB.getFileInfo(ClientNamenodeProtocolServerSideTranslatorPB.java:830)

at org.apache.hadoop.hdfs.protocol.proto.ClientNamenodeProtocolProtos$ClientNamenodeProtocol$2.callBlockingMethod(ClientNamenodeProtocolProtos.java)

at org.apache.hadoop.ipc.ProtobufRpcEngine$Server$ProtoBufRpcInvoker.call(ProtobufRpcEngine.java:529)

at org.apache.hadoop.ipc.RPC$Server.call(RPC.java:1073)

at org.apache.hadoop.ipc.Server$RpcCall.run(Server.java:1039)

at org.apache.hadoop.ipc.Server$RpcCall.run(Server.java:963)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:422)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:2065)

at org.apache.hadoop.ipc.Server$Handler.run(Server.java:3047) (state=42000,code=40000)
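
The deny-execute observation can be captured as a tiny model for reasoning about where to place such a policy. It is a simplification of the observed behaviour only, not Ranger's actual evaluation logic. It assumes non-recursive deny policies and assumes that the EXECUTE check for a table is evaluated against the table directory itself, as the `inode="/a/b/c"` in the error suggests. The function name is made up for illustration.

```python
import posixpath


def execute_denied_for_table(table_dir, deny_execute_paths):
    """Return True if a non-recursive deny-execute policy sits on the table directory.

    Simplified model of the test result: only a policy on the table directory
    itself blocks access; a policy on a parent such as /a/b does not.
    """
    target = posixpath.normpath(table_dir)
    return any(posixpath.normpath(path) == target for path in deny_execute_paths)


if __name__ == "__main__":
    print(execute_denied_for_table("/a/b/c", ["/a/b/c"]))  # deny on the table dir
    print(execute_denied_for_table("/a/b/c", ["/a/b/"]))   # deny on the parent only
```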

created at 2023-08-11