Kyuubi testing

Connecting to Kyuubi with beeline is much like connecting to Hive with beeline; the principal comes from Kyuubi's own configuration.

beeline -u "jdbc:hive2://172.16.16.14:10009/default;principal=hadoop/172.16.16.14@EMR-NJ10EACT"

The beeline principal is configured in Kyuubi's kyuubi-defaults.conf:

key: kyuubi.kinit.principal

value: hadoop/_HOST@EMR-NJ10EACT

For comparison, the Hive beeline connection on the same cluster takes its principal from Hive's XML configuration:

beeline -u "jdbc:hive2://172.16.16.14:7001/default;principal=hadoop/172.16.16.14@EMR-NJ10EACT"
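The two URLs above differ only in the port (10009 for Kyuubi, 7001 for HiveServer2); the principal is the configured template with _HOST replaced. A minimal bash sketch of that expansion follows. The helper names expand_principal and jdbc_url are hypothetical, and it assumes, as on this cluster, that _HOST expands to the same address used in the URL.

```shell
#!/usr/bin/env bash
# Hypothetical helpers, not part of Kyuubi or Hive.

# Replace the _HOST placeholder in a principal template with a concrete host.
expand_principal() {
  local template="$1" host="$2"
  printf '%s\n' "${template//_HOST/$host}"
}

# Build the beeline JDBC URL for the default database.
jdbc_url() {
  local host="$1" port="$2" template="$3"
  printf 'jdbc:hive2://%s:%s/default;principal=%s\n' \
    "$host" "$port" "$(expand_principal "$template" "$host")"
}

# Kyuubi (10009) and HiveServer2 (7001) on the test host.
jdbc_url 172.16.16.14 10009 'hadoop/_HOST@EMR-NJ10EACT'
jdbc_url 172.16.16.14 7001 'hadoop/_HOST@EMR-NJ10EACT'
```

The result can be passed straight to beeline, e.g. beeline -u "$(jdbc_url 172.16.16.14 10009 'hadoop/_HOST@EMR-NJ10EACT')".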

Kerberos login

[root@172 ~]# ls

emr-nj10eact_gee.keytab ldap.stash updateForTemrfs.sh

[root@172 ~]# klist -kt emr-nj10eact_gee.keytab

Keytab name: FILE:emr-nj10eact_gee.keytab

KVNO Timestamp Principal

---- ------------------- ------------------------------------------------------

1 08/24/2023 20:21:06 gee@EMR-NJ10EACT

[root@172 ~]# kinit -kt emr-nj10eact_gee.keytab gee@EMR-NJ10EACT

[root@172 ~]# klist

Ticket cache: FILE:/tmp/krb5cc_0

Default principal: gee@EMR-NJ10EACT

Valid starting Expires Service principal

08/24/2023 21:01:04 08/25/2023 09:01:04 krbtgt/EMR-NJ10EACT@EMR-NJ10EACT

renew until 08/31/2023 21:01:04
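The login above can be wrapped so that kinit only runs when the ticket cache is missing or expired. This is a rough bash sketch; ensure_ticket is a hypothetical name, and it relies on klist -s exiting with status 0 only when a readable, unexpired cache exists.

```shell
#!/usr/bin/env bash
# Hypothetical helper: reuse a valid ticket cache, otherwise log in from the keytab.
ensure_ticket() {
  local keytab="$1" principal="$2"
  if klist -s; then
    # A usable ticket is already cached; nothing to do.
    echo "ticket cache is valid"
  else
    # No cache or an expired one: obtain a new TGT from the keytab.
    kinit -kt "$keytab" "$principal"
  fi
}
```

For the user in this test the call would be ensure_ticket emr-nj10eact_gee.keytab gee@EMR-NJ10EACT.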

Test cluster:

- Tencent Cloud emr-3.6.0

- hive-3.1.3; spark-3.3.2; ranger-2.3.0; kyuubi-1.7.0

The default Kyuubi install does not appear to include spark-authz, the Ranger plugin.

[root@172 ~]# cd /usr/local/service/

[root@172 /usr/local/service]# ls

apps filebeat hadoop hive hue iceberg knox kyuubi ranger scripts slider spark tez woodpecker zookeeper

[root@172 /usr/local/service]# ls kyuubi/

beeline-jars bin charts conf docker extension externals jars LICENSE licenses logs NOTICE pid RELEASE work

[root@172 /usr/local/service]# ls kyuubi/extension/

kyuubi-extension-spark-3-2_2.12-1.7.0.jar

[root@172 /usr/local/service]# ls kyuubi/externals/

apache-hive-3.1.3-bin engines

[root@172 /usr/local/service]# ls kyuubi/externals/engines/

flink hive jdbc spark trino
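The manual directory listing above can be turned into a quick check. This is a minimal bash sketch, assuming the plugin jar would be named kyuubi-spark-authz*.jar (the name the Maven build later in these notes produces); has_authz_jar is a hypothetical helper, and which directories to scan is also an assumption.

```shell
#!/usr/bin/env bash
# Hypothetical helper: report whether any of the given directories
# contains a kyuubi-spark-authz jar.
has_authz_jar() {
  local dir
  for dir in "$@"; do
    # An unmatched glob is passed literally to ls, which then fails.
    if ls "$dir"/kyuubi-spark-authz*.jar > /dev/null 2>&1; then
      echo "found in $dir"
      return 0
    fi
  done
  echo "not found"
  return 1
}
```

On this cluster, has_authz_jar /usr/local/service/kyuubi/extension would print "not found", since that directory only holds kyuubi-extension-spark-3-2_2.12-1.7.0.jar.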

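Kyuubi beeline connection test

On a new cluster where Ranger permissions have not been opened up yet, the first Kyuubi login fails with the engine launch timeout shown below, possibly because some Hive initialization has not completed. Open up the Ranger permissions first, log in once through Hive beeline so that initialization runs, and then proceed.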

[root@172 ~]# beeline -u "jdbc:hive2://172.16.16.14:10009/default;principal=hadoop/172.16.16.14@EMR-NJ10EACT"

which: no hbase in (/usr/local/service/starrocks/bin:/data/Impala/shell:/usr/local/service/kudu/bin:/usr/local/service/tez/bin:/usr/local/jdk/bin:/usr/local/service/hadoop/bin:/usr/local/service/hive/bin:/usr/local/service/hbase/bin:/usr/local/service/spark/bin:/usr/local/service/storm/bin:/usr/local/service/sqoop/bin:/usr/local/service/kylin/bin:/usr/local/service/alluxio/bin:/usr/local/service/flink/bin:/data/Impala/bin:/usr/local/service/oozie/bin:/usr/local/service/presto/bin:/usr/local/service/slider/bin:/usr/local/service/kudu/bin:/usr/local/jdk//bin:/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/root/bin)

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/usr/local/service/hive/lib/log4j-slf4j-impl-2.17.1.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/usr/local/service/hadoop/share/hadoop/common/lib/slf4j-log4j12-1.7.25.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]

Connecting to jdbc:hive2://172.16.16.14:10009/default;principal=hadoop/172.16.16.14@EMR-NJ10EACT

Error: org.apache.kyuubi.KyuubiSQLException: Timeout(180000 ms, you can modify kyuubi.session.engine.initialize.timeout to change it) to launched SPARK_SQL engine with /usr/local/service/spark/bin/spark-submit \

--class org.apache.kyuubi.engine.spark.SparkSQLEngine \

--conf spark.hive.server2.thrift.resultset.default.fetch.size=1000 \

--conf spark.kyuubi.backend.engine.exec.pool.size=100 \

--conf spark.kyuubi.backend.engine.exec.pool.wait.queue.size=100 \

--conf spark.kyuubi.backend.server.exec.pool.size=100 \

--conf spark.kyuubi.backend.server.exec.pool.wait.queue.size=100 \

--conf spark.kyuubi.client.ipAddress=172.16.16.14 \

--conf spark.kyuubi.engine.credentials=SERUUwABETE3Mi4xNi4xNi4xNDo0MDA3OgADZ2VlA2dlZSBoYWRvb3AvMTcyLjE2LjE2LjE0QEVN

Ui1OSjEwRUFDVIoBiieE2MWKAYpLkVzFAQIUOo3DvNkaxV6eBYhcqnPAme64XSIVSERGU19ERUxF

R0FUSU9OX1RPS0VOETE3Mi4xNi4xNi4xNDo0MDA3AA== \

--conf spark.kyuubi.engine.operation.log.dir.root=/data/emr/kyuubi/logs/engine_operation_logs \

--conf spark.kyuubi.engine.share.level=USER \

--conf spark.kyuubi.engine.submit.time=1692880725170 \

--conf spark.kyuubi.frontend.thrift.max.worker.threads=999 \

--conf spark.kyuubi.frontend.thrift.min.worker.threads=9 \

--conf spark.kyuubi.ha.addresses=172.16.16.14:2181 \

--conf spark.kyuubi.ha.engine.ref.id=1bccdf57-a8c4-43bb-8f16-7c93f697f2d6 \

--conf spark.kyuubi.ha.namespace=/kyuubi_1.7.0_USER_SPARK_SQL/gee/default \

--conf spark.kyuubi.metrics.enabled=true \

--conf spark.kyuubi.metrics.prometheus.port=10019 \

--conf spark.kyuubi.metrics.reporters=PROMETHEUS \

--conf spark.kyuubi.server.ipAddress=172.16.16.14 \

--conf spark.kyuubi.session.connection.url=172.16.16.14:10009 \

--conf spark.kyuubi.session.engine.idle.timeout=PT30M \

--conf spark.kyuubi.session.engine.initialize.timeout=PT3M \

--conf spark.kyuubi.session.engine.login.timeout=PT15S \

--conf spark.kyuubi.session.engine.open.max.attempts=9 \

--conf spark.kyuubi.session.engine.open.retry.wait=PT10S \

--conf spark.kyuubi.session.engine.startup.waitCompletion=true \

--conf spark.kyuubi.session.real.user=gee \

--conf spark.app.name=kyuubi_USER_SPARK_SQL_gee_default_1bccdf57-a8c4-43bb-8f16-7c93f697f2d6 \

--conf spark.master=yarn \

--conf spark.yarn.tags=KYUUBI,1bccdf57-a8c4-43bb-8f16-7c93f697f2d6 \

--proxy-user gee /usr/local/service/kyuubi/externals/engines/spark/kyuubi-spark-sql-engine_2.12-1.7.0.jar. (false,APPLICATION_NOT_FOUND) (state=,code=0)

Driver: Hive JDBC (version 3.1.3)

Transaction isolation: TRANSACTION_REPEATABLE_READ

Beeline version 3.1.3 by Apache Hive

0: jdbc:hive2://172.16.16.14:10009/default> show databases;

Error: org.apache.kyuubi.KyuubiSQLException: Timeout(180000 ms, you can modify kyuubi.session.engine.initialize.timeout to change it) to launched SPARK_SQL engine with /usr/local/service/spark/bin/spark-submit \
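The 180000 ms in the error is the same value as the PT3M passed for spark.kyuubi.session.engine.initialize.timeout in the spark-submit arguments; the message reports milliseconds while the setting is written as an ISO-8601 duration. A small bash sketch of the conversion follows (ms_to_iso8601 is a hypothetical helper and only handles whole seconds). Note that in this test the launch was fixed by granting Ranger permissions, not by raising the timeout.

```shell
#!/usr/bin/env bash
# Hypothetical helper: convert milliseconds to an ISO-8601 duration (whole seconds only).
ms_to_iso8601() {
  local total=$(( $1 / 1000 ))
  local h=$(( total / 3600 ))
  local m=$(( (total % 3600) / 60 ))
  local s=$(( total % 60 ))
  local out="PT"
  if [ "$h" -gt 0 ]; then out="${out}${h}H"; fi
  if [ "$m" -gt 0 ]; then out="${out}${m}M"; fi
  # Emit seconds when non-zero, or when the whole duration is zero.
  if [ "$s" -gt 0 ] || [ "$total" -eq 0 ]; then out="${out}${s}S"; fi
  printf '%s\n' "$out"
}

ms_to_iso8601 180000
```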

After granting all Ranger permissions (hdfs/hive/yarn), the Kyuubi login succeeds

[root@172 ~]# beeline -u "jdbc:hive2://172.16.16.14:10009/default;principal=hadoop/172.16.16.14@EMR-NJ10EACT"

Connecting to jdbc:hive2://172.16.16.14:10009/default;principal=hadoop/172.16.16.14@EMR-NJ10EACT

Connected to: Spark SQL (version 3.3.2)

Driver: Hive JDBC (version 3.1.3)

Transaction isolation: TRANSACTION_REPEATABLE_READ

Beeline version 3.1.3 by Apache Hive

0: jdbc:hive2://172.16.16.14:10009/default> show databases;

23/08/24 20:47:05 INFO credentials.HadoopCredentialsManager: Send new credentials with epoch 0 to SQL engine through session 395b02d0-0042-4373-a98d-b756f8f82e55

23/08/24 20:47:05 INFO credentials.HadoopCredentialsManager: Update session credentials epoch from -1 to 0

23/08/24 20:47:05 INFO operation.ExecuteStatement: Processing gee's query[f918f99b-be0d-4378-ab94-235dea7fe44c]: PENDING_STATE -> RUNNING_STATE, statement:

show databases

23/08/24 20:47:05 INFO operation.ExecuteStatement: Query[f918f99b-be0d-4378-ab94-235dea7fe44c] in FINISHED_STATE

23/08/24 20:47:05 INFO operation.ExecuteStatement: Processing gee's query[f918f99b-be0d-4378-ab94-235dea7fe44c]: RUNNING_STATE -> FINISHED_STATE, time taken: 0.146 seconds

+------------+

| namespace |

+------------+

| default |

+------------+

1 row selected (0.423 seconds)

0: jdbc:hive2://172.16.16.14:10009/default> select current_user() ;

23/08/24 20:47:55 INFO credentials.HadoopCredentialsManager: Send new credentials with epoch 0 to SQL engine through session b708ef7a-5f59-4b4b-af4b-ebf1ee0ce95d

23/08/24 20:47:55 INFO credentials.HadoopCredentialsManager: Update session credentials epoch from -1 to 0

23/08/24 20:47:55 INFO operation.ExecuteStatement: Processing gee's query[78583ada-9b38-4d65-86f0-05f54f975304]: PENDING_STATE -> RUNNING_STATE, statement:

select current_user()

23/08/24 20:47:55 INFO operation.ExecuteStatement: Query[78583ada-9b38-4d65-86f0-05f54f975304] in FINISHED_STATE

23/08/24 20:47:55 INFO operation.ExecuteStatement: Processing gee's query[78583ada-9b38-4d65-86f0-05f54f975304]: RUNNING_STATE -> FINISHED_STATE, time taken: 0.304 seconds

+-----------------+

| current_user() |

+-----------------+

| gee |

+-----------------+

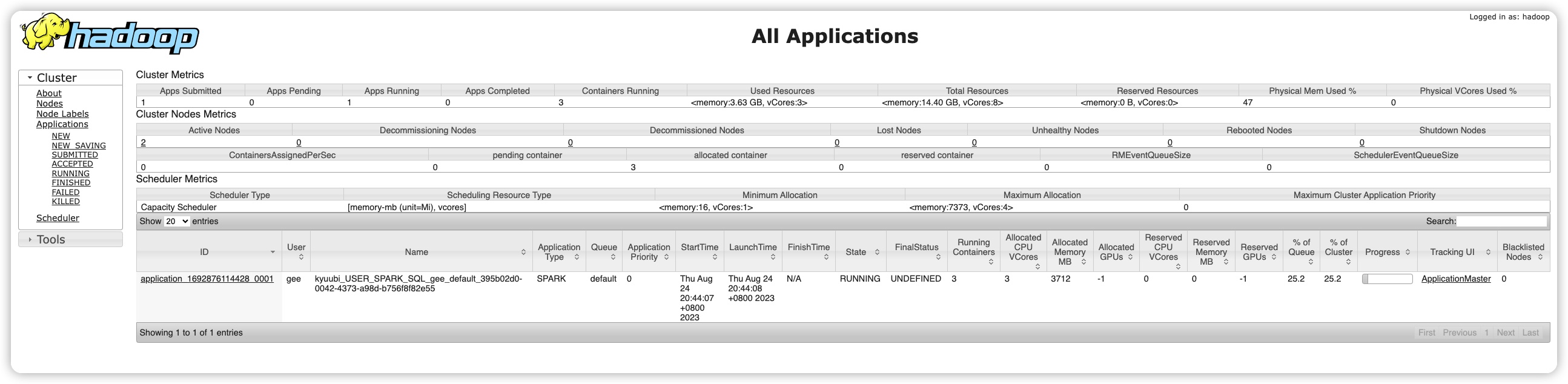

In the YARN application list, this Kyuubi beeline connection shows up as a Spark job, and the user is identified correctly.

Ranger: revoke the Hive permission

Hive beeline reports an error

0: jdbc:hive2://172.16.16.14:7001/default> select * from sparksql_test4;

Error: Error while compiling statement: FAILED: HiveAccessControlException Permission denied: user [gee] does not have [SELECT] privilege on [default/sparksql_test4/*] (state=42000,code=40000)

0: jdbc:hive2://172.16.16.14:7001/default> select current_user();

+------+

| _c0 |

+------+

| gee |

+------+

1 row selected (0.566 seconds)

Kyuubi can still read the table

0: jdbc:hive2://172.16.16.14:10009/default> select * from sparksql_test4;

23/08/24 20:55:13 INFO operation.ExecuteStatement: Processing gee's query[2660d8b7-9276-414d-aef5-90d0738452b5]: PENDING_STATE -> RUNNING_STATE, statement:

select * from sparksql_test4

23/08/24 20:55:13 INFO operation.ExecuteStatement: Query[2660d8b7-9276-414d-aef5-90d0738452b5] in FINISHED_STATE

23/08/24 20:55:13 INFO operation.ExecuteStatement: Processing gee's query[2660d8b7-9276-414d-aef5-90d0738452b5]: RUNNING_STATE -> FINISHED_STATE, time taken: 0.08 seconds

+----+----+

| a | b |

+----+----+

+----+----+

No rows selected (0.092 seconds)

0: jdbc:hive2://172.16.16.14:10009/default> select current_user();

23/08/24 20:55:31 INFO operation.ExecuteStatement: Processing gee's query[5e3de6bf-d43b-4cad-9839-7cb537d657a2]: PENDING_STATE -> RUNNING_STATE, statement:

select current_user()

23/08/24 20:55:31 INFO operation.ExecuteStatement: Query[5e3de6bf-d43b-4cad-9839-7cb537d657a2] in FINISHED_STATE

23/08/24 20:55:31 INFO operation.ExecuteStatement: Processing gee's query[5e3de6bf-d43b-4cad-9839-7cb537d657a2]: RUNNING_STATE -> FINISHED_STATE, time taken: 0.107 seconds

+-----------------+

| current_user() |

+-----------------+

| gee |

+-----------------+

1 row selected (0.141 seconds)

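Ranger: revoke the HDFS permission, and Kyuubi finally reports an error

So the documentation is right: Kyuubi supports multi-tenancy, and Storage-based Authorization is in effect by default. Without the spark-authz plugin the Hive policies in Ranger are not consulted, but the engine accesses HDFS as the proxy user gee, so the HDFS policies still apply.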

https://kyuubi.readthedocs.io/en/v1.7.0/security/authorization/spark/overview.html

0: jdbc:hive2://172.16.16.14:10009/default> select * from sparksql_test4;

23/08/24 20:56:51 INFO operation.ExecuteStatement: Processing gee's query[8173a427-2ba3-4f57-a258-9b726b2761d5]: PENDING_STATE -> RUNNING_STATE, statement:

select * from sparksql_test4

2023-08-24 20:56:51,542 [WARN] [GetFileInfo #0] DFSClient: [Schema: hdfs://172.16.16.14:4007] Got org.apache.ranger.authorization.hadoop.exceptions.RangerAccessControlException

23/08/24 20:56:51 INFO operation.ExecuteStatement: Query[8173a427-2ba3-4f57-a258-9b726b2761d5] in ERROR_STATE

23/08/24 20:56:51 INFO operation.ExecuteStatement: Processing gee's query[8173a427-2ba3-4f57-a258-9b726b2761d5]: RUNNING_STATE -> ERROR_STATE, time taken: 0.069 seconds

Error: org.apache.kyuubi.KyuubiSQLException: org.apache.kyuubi.KyuubiSQLException: Error operating ExecuteStatement: org.apache.hadoop.ipc.RemoteException(org.apache.ranger.authorization.hadoop.exceptions.RangerAccessControlException): Permission denied: user=gee, access=EXECUTE, inode="/usr/hive/warehouse/sparksql_test4"

at org.apache.ranger.authorization.hadoop.RangerHdfsAuthorizer$RangerAccessControlEnforcer.checkRangerPermission(RangerHdfsAuthorizer.java:501)

at org.apache.ranger.authorization.hadoop.RangerHdfsAuthorizer$RangerAccessControlEnforcer.checkPermission(RangerHdfsAuthorizer.java:248)

at org.apache.hadoop.hdfs.server.namenode.FSPermissionChecker.checkPermission(FSPermissionChecker.java:225)

at org.apache.hadoop.hdfs.server.namenode.FSPermissionChecker.checkTraverse(FSPermissionChecker.java:672)

at org.apache.hadoop.hdfs.server.namenode.FSDirectory.checkTraverse(FSDirectory.java:1863)

at org.apache.hadoop.hdfs.server.namenode.FSDirectory.checkTraverse(FSDirectory.java:1881)

at org.apache.hadoop.hdfs.server.namenode.FSDirectory.resolvePath(FSDirectory.java:694)

at org.apache.hadoop.hdfs.server.namenode.FSDirStatAndListingOp.getFileInfo(FSDirStatAndListingOp.java:112)

at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.getFileInfo(FSNamesystem.java:3215)

at org.apache.hadoop.hdfs.server.namenode.NameNodeRpcServer.getFileInfo(NameNodeRpcServer.java:1294)

at org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolServerSideTranslatorPB.getFileInfo(ClientNamenodeProtocolServerSideTranslatorPB.java:830)

at org.apache.hadoop.hdfs.protocol.proto.ClientNamenodeProtocolProtos$ClientNamenodeProtocol$2.callBlockingMethod(ClientNamenodeProtocolProtos.java)

at org.apache.hadoop.ipc.ProtobufRpcEngine$Server$ProtoBufRpcInvoker.call(ProtobufRpcEngine.java:529)

at org.apache.hadoop.ipc.RPC$Server.call(RPC.java:1073)

at org.apache.hadoop.ipc.Server$RpcCall.run(Server.java:1039)

at org.apache.hadoop.ipc.Server$RpcCall.run(Server.java:963)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:422)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:2065)

at org.apache.hadoop.ipc.Server$Handler.run(Server.java:3047)

at org.apache.hadoop.ipc.Client.getRpcResponse(Client.java:1581)

at org.apache.hadoop.ipc.Client.call(Client.java:1527)

at org.apache.hadoop.ipc.Client.call(Client.java:1424)

at org.apache.hadoop.ipc.ProtobufRpcEngine$Invoker.invoke(ProtobufRpcEngine.java:233)

at org.apache.hadoop.ipc.ProtobufRpcEngine$Invoker.invoke(ProtobufRpcEngine.java:118)

at com.sun.proxy.$Proxy26.getFileInfo(Unknown Source)

at org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolTranslatorPB.getFileInfo(ClientNamenodeProtocolTranslatorPB.java:819)

at sun.reflect.GeneratedMethodAccessor4.invoke(Unknown Source)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.hadoop.io.retry.RetryInvocationHandler.invokeMethod(RetryInvocationHandler.java:424)

at org.apache.hadoop.io.retry.RetryInvocationHandler$Call.invokeMethod(RetryInvocationHandler.java:165)

at org.apache.hadoop.io.retry.RetryInvocationHandler$Call.invoke(RetryInvocationHandler.java:157)

at org.apache.hadoop.io.retry.RetryInvocationHandler$Call.invokeOnce(RetryInvocationHandler.java:95)

at org.apache.hadoop.io.retry.RetryInvocationHandler.invoke(RetryInvocationHandler.java:359)

at com.sun.proxy.$Proxy27.getFileInfo(Unknown Source)

at org.apache.hadoop.hdfs.DFSClient.getFileInfo(DFSClient.java:1819)

at org.apache.hadoop.hdfs.DistributedFileSystem$29.doCall(DistributedFileSystem.java:1627)

at org.apache.hadoop.hdfs.DistributedFileSystem$29.doCall(DistributedFileSystem.java:1624)

at org.apache.hadoop.fs.FileSystemLinkResolver.resolve(FileSystemLinkResolver.java:81)

at org.apache.hadoop.hdfs.DistributedFileSystem.getFileStatus(DistributedFileSystem.java:1639)

at org.apache.hadoop.fs.Globber.getFileStatus(Globber.java:65)

at org.apache.hadoop.fs.Globber.doGlob(Globber.java:281)

at org.apache.hadoop.fs.Globber.glob(Globber.java:149)

at org.apache.hadoop.fs.FileSystem.globStatus(FileSystem.java:2091)

at org.apache.hadoop.mapred.LocatedFileStatusFetcher$ProcessInitialInputPathCallable.call(LocatedFileStatusFetcher.java:312)

at org.apache.hadoop.mapred.LocatedFileStatusFetcher$ProcessInitialInputPathCallable.call(LocatedFileStatusFetcher.java:293)

at org.apache.hadoop.shaded.com.google.common.util.concurrent.TrustedListenableFutureTask$TrustedFutureInterruptibleTask.runInterruptibly(TrustedListenableFutureTask.java:125)

at org.apache.hadoop.shaded.com.google.common.util.concurrent.InterruptibleTask.run(InterruptibleTask.java:57)

at org.apache.hadoop.shaded.com.google.common.util.concurrent.TrustedListenableFutureTask.run(TrustedListenableFutureTask.java:78)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)

at java.lang.Thread.run(Thread.java:750)

at org.apache.kyuubi.KyuubiSQLException$.apply(KyuubiSQLException.scala:69)

at org.apache.kyuubi.engine.spark.operation.SparkOperation$$anonfun$onError$1.applyOrElse(SparkOperation.scala:188)

at org.apache.kyuubi.engine.spark.operation.SparkOperation$$anonfun$onError$1.applyOrElse(SparkOperation.scala:172)

at scala.runtime.AbstractPartialFunction.apply(AbstractPartialFunction.scala:38)

at org.apache.kyuubi.engine.spark.operation.ExecuteStatement.$anonfun$executeStatement$1(ExecuteStatement.scala:90)

at scala.runtime.java8.JFunction0$mcV$sp.apply(JFunction0$mcV$sp.java:23)

at org.apache.kyuubi.engine.spark.operation.SparkOperation.$anonfun$withLocalProperties$1(SparkOperation.scala:155)

at org.apache.spark.sql.execution.SQLExecution$.withSQLConfPropagated(SQLExecution.scala:169)

at org.apache.kyuubi.engine.spark.operation.SparkOperation.withLocalProperties(SparkOperation.scala:139)

at org.apache.kyuubi.engine.spark.operation.ExecuteStatement.executeStatement(ExecuteStatement.scala:80)

at org.apache.kyuubi.engine.spark.operation.ExecuteStatement$$anon$1.run(ExecuteStatement.scala:102)

at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:511)

at java.util.concurrent.FutureTask.run(FutureTask.java:266)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)

at java.lang.Thread.run(Thread.java:750)

Caused by: org.apache.hadoop.ipc.RemoteException(org.apache.ranger.authorization.hadoop.exceptions.RangerAccessControlException): Permission denied: user=gee, access=EXECUTE, inode="/usr/hive/warehouse/sparksql_test4"

at org.apache.ranger.authorization.hadoop.RangerHdfsAuthorizer$RangerAccessControlEnforcer.checkRangerPermission(RangerHdfsAuthorizer.java:501)

at org.apache.ranger.authorization.hadoop.RangerHdfsAuthorizer$RangerAccessControlEnforcer.checkPermission(RangerHdfsAuthorizer.java:248)

at org.apache.hadoop.hdfs.server.namenode.FSPermissionChecker.checkPermission(FSPermissionChecker.java:225)

at org.apache.hadoop.hdfs.server.namenode.FSPermissionChecker.checkTraverse(FSPermissionChecker.java:672)

at org.apache.hadoop.hdfs.server.namenode.FSDirectory.checkTraverse(FSDirectory.java:1863)

at org.apache.hadoop.hdfs.server.namenode.FSDirectory.checkTraverse(FSDirectory.java:1881)

at org.apache.hadoop.hdfs.server.namenode.FSDirectory.resolvePath(FSDirectory.java:694)

at org.apache.hadoop.hdfs.server.namenode.FSDirStatAndListingOp.getFileInfo(FSDirStatAndListingOp.java:112)

at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.getFileInfo(FSNamesystem.java:3215)

at org.apache.hadoop.hdfs.server.namenode.NameNodeRpcServer.getFileInfo(NameNodeRpcServer.java:1294)

at org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolServerSideTranslatorPB.getFileInfo(ClientNamenodeProtocolServerSideTranslatorPB.java:830)

at org.apache.hadoop.hdfs.protocol.proto.ClientNamenodeProtocolProtos$ClientNamenodeProtocol$2.callBlockingMethod(ClientNamenodeProtocolProtos.java)

at org.apache.hadoop.ipc.ProtobufRpcEngine$Server$ProtoBufRpcInvoker.call(ProtobufRpcEngine.java:529)

at org.apache.hadoop.ipc.RPC$Server.call(RPC.java:1073)

at org.apache.hadoop.ipc.Server$RpcCall.run(Server.java:1039)

at org.apache.hadoop.ipc.Server$RpcCall.run(Server.java:963)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:422)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:2065)

at org.apache.hadoop.ipc.Server$Handler.run(Server.java:3047)

at org.apache.hadoop.ipc.Client.getRpcResponse(Client.java:1581)

at org.apache.hadoop.ipc.Client.call(Client.java:1527)

at org.apache.hadoop.ipc.Client.call(Client.java:1424)

at org.apache.hadoop.ipc.ProtobufRpcEngine$Invoker.invoke(ProtobufRpcEngine.java:233)

at org.apache.hadoop.ipc.ProtobufRpcEngine$Invoker.invoke(ProtobufRpcEngine.java:118)

at com.sun.proxy.$Proxy26.getFileInfo(Unknown Source)

at org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolTranslatorPB.getFileInfo(ClientNamenodeProtocolTranslatorPB.java:819)

at sun.reflect.GeneratedMethodAccessor4.invoke(Unknown Source)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.hadoop.io.retry.RetryInvocationHandler.invokeMethod(RetryInvocationHandler.java:424)

at org.apache.hadoop.io.retry.RetryInvocationHandler$Call.invokeMethod(RetryInvocationHandler.java:165)

at org.apache.hadoop.io.retry.RetryInvocationHandler$Call.invoke(RetryInvocationHandler.java:157)

at org.apache.hadoop.io.retry.RetryInvocationHandler$Call.invokeOnce(RetryInvocationHandler.java:95)

at org.apache.hadoop.io.retry.RetryInvocationHandler.invoke(RetryInvocationHandler.java:359)

at com.sun.proxy.$Proxy27.getFileInfo(Unknown Source)

at org.apache.hadoop.hdfs.DFSClient.getFileInfo(DFSClient.java:1819)

at org.apache.hadoop.hdfs.DistributedFileSystem$29.doCall(DistributedFileSystem.java:1627)

at org.apache.hadoop.hdfs.DistributedFileSystem$29.doCall(DistributedFileSystem.java:1624)

at org.apache.hadoop.fs.FileSystemLinkResolver.resolve(FileSystemLinkResolver.java:81)

at org.apache.hadoop.hdfs.DistributedFileSystem.getFileStatus(DistributedFileSystem.java:1639)

at org.apache.hadoop.fs.Globber.getFileStatus(Globber.java:65)

at org.apache.hadoop.fs.Globber.doGlob(Globber.java:281)

at org.apache.hadoop.fs.Globber.glob(Globber.java:149)

at org.apache.hadoop.fs.FileSystem.globStatus(FileSystem.java:2091)

at org.apache.hadoop.mapred.LocatedFileStatusFetcher$ProcessInitialInputPathCallable.call(LocatedFileStatusFetcher.java:312)

at org.apache.hadoop.mapred.LocatedFileStatusFetcher$ProcessInitialInputPathCallable.call(LocatedFileStatusFetcher.java:293)

at org.apache.hadoop.shaded.com.google.common.util.concurrent.TrustedListenableFutureTask$TrustedFutureInterruptibleTask.runInterruptibly(TrustedListenableFutureTask.java:125)

at org.apache.hadoop.shaded.com.google.common.util.concurrent.InterruptibleTask.run(InterruptibleTask.java:57)

at org.apache.hadoop.shaded.com.google.common.util.concurrent.TrustedListenableFutureTask.run(TrustedListenableFutureTask.java:78)

... 3 more

at org.apache.kyuubi.KyuubiSQLException$.apply(KyuubiSQLException.scala:69)

at org.apache.kyuubi.operation.ExecuteStatement.waitStatementComplete(ExecuteStatement.scala:129)

at org.apache.kyuubi.operation.ExecuteStatement.$anonfun$runInternal$1(ExecuteStatement.scala:161)

at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:511)

at java.util.concurrent.FutureTask.run(FutureTask.java:266)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.
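The two denials in this test come from different layers: HiveServer2 rejected the query with HiveAccessControlException, while Kyuubi only failed once HDFS itself raised RangerAccessControlException. A rough bash sketch for telling them apart in a log line follows; deny_layer is a hypothetical helper, and the mapping is an assumption based only on the messages seen in these notes.

```shell
#!/usr/bin/env bash
# Hypothetical helper: name the layer that rejected a query, based on the error text.
# The exception-to-layer mapping is inferred from this test's logs only.
deny_layer() {
  case "$1" in
    *HiveAccessControlException*)   echo "hive" ;;    # Ranger Hive plugin in HiveServer2
    *RangerAccessControlException*) echo "hdfs" ;;    # Ranger HDFS plugin in the NameNode
    *)                              echo "unknown" ;;
  esac
}
```

Feeding it the Hive beeline error prints "hive"; feeding it the Kyuubi error prints "hdfs".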

Spark SQL AuthZ Ranger plugin

mvn clean package -pl :kyuubi-spark-authz_2.12 -DskipTests -Dspark.version=3.3.2 -Dranger.version=2.3.0

[root@VM-152-104-centos ~/projects/kyuubi/extensions/spark/kyuubi-spark-authz/target]# ls -alh

total 516K

drwxr-xr-x 11 root root 4.0K Aug 24 21:30 .

drwxr-xr-x 4 root root 4.0K Aug 24 21:29 ..

-rw-r--r-- 1 root root 30 Aug 24 21:29 .plxarc

drwxr-xr-x 2 root root 4.0K Aug 24 21:29 analysis

drwxr-xr-x 2 root root 4.0K Aug 24 21:29 antrun

drwxr-xr-x 3 root root 4.0K Aug 24 21:29 generated-sources

drwxr-xr-x 3 root root 4.0K Aug 24 21:29 generated-test-sources

-rw-r--r-- 1 root root 465K Aug 24 21:30 kyuubi-spark-authz_2.12-1.8.0-SNAPSHOT.jar

drwxr-xr-x 2 root root 4.0K Aug 24 21:30 maven-archiver

drwxr-xr-x 3 root root 4.0K Aug 24 21:29 maven-shared-archive-resources

drwxr-xr-x 3 root root 4.0K Aug 24 21:29 maven-status

drwxr-xr-x 5 root root 4.0K Aug 24 21:30 scala-2.12

drwxr-xr-x 2 root root 4.0K Aug 24 21:29 tmp

[root@VM-152-104-centos ~/projects/kyuubi/extensions/spark/kyuubi-spark-authz/target]# ls scala-2.12/

classes jars test-classes

[root@VM-152-104-centos ~/projects/kyuubi/extensions/spark/kyuubi-spark-authz/target]# ls scala-2.12/jars/

gethostname4j-1.0.0.jar jetty-client-9.4.51.v20230217.jar jna-5.7.0.jar ranger-plugins-audit-2.3.0.jar

jackson-jaxrs-1.9.13.jar jetty-http-9.4.51.v20230217.jar jna-platform-5.7.0.jar ranger-plugins-common-2.3.0.jar

jersey-client-1.19.4.jar jetty-io-9.4.51.v20230217.jar kyuubi-util-1.8.0-SNAPSHOT.jar ranger-plugins-cred-2.3.0.jar

jersey-core-1.19.4.jar jetty-util-9.4.51.v20230217.jar kyuubi-util-scala_2.12-1.8.0-SNAPSHOT.jar